Docket #: S18-093

Efficient, Scalable Training of Artificial Neural Networks Directly on Optical Devices

Engineers in Prof. Shanhui Fan's laboratory have developed an efficient, scalable, in-situ method to train, configure and tune complex photonic circuits for artificial intelligence and machine learning. This technology adapts a conventional numerical algorithm (backpropagation) so that it can be implemented experimentally directly on an optical chip. Those physical experiments then provide the input for optimizing the chip toward any designated function. Because photonic circuits are faster, less computationally expensive and more energy efficient than traditional electronic computers, this optics-only in-situ approach is more efficient than conventional training that uses a computational model on an external computer. In addition, the method can be scaled to a large network because it tests and tunes parameters in parallel rather than using brute-force serial techniques. This method was originally designed to train artificial neural networks (ANNs) in deep learning applications. It could also be used to create other complex, adaptive nonlinear photonic circuits with end-user applications such as LiDAR, laser accelerators and optical communications.

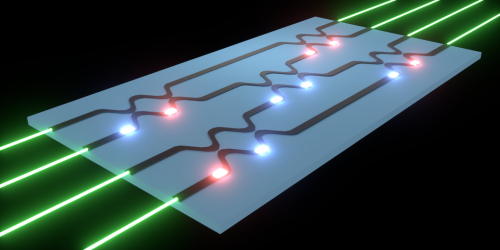

Figure description - Researchers have shown a neural network can be trained using an optical circuit (blue rectangle in the illustration). In the full network there would be several of these linked together. The laser inputs (green) encode information that is carried through the chip by optical waveguides (black). The chip performs operations crucial to the artificial neural network using tunable beam splitters, which are represented by the curved sections in the waveguides. These sections couple two adjacent waveguides together and are tuned by adjusting the settings of optical phase shifters (red and blue glowing objects), which act like 'knobs' that can be adjusted during training to perform a given task.

Stage of Research

The inventors have performed optical simulations to demonstrate proof of concept for this training technique by teaching an algorithm to perform complicated functions.

Applications

- Artificial Neural Network (ANN) training - optimize photonic hardware platforms for artificial intelligence and machine learning with end-user applications such as speech and image recognition

- Photonic devices - methods could be used for sensitivity analysis of any reconfigurable photonic system with end-user applications such as:

  - LiDAR

  - laser accelerators

  - optical communications

  - scientific research platforms

Advantages

- Efficient

  - direct optical training realizes the computational efficiency, speed and power advantages gained by using photonic circuits instead of traditional electronic circuits for artificial neural networks

  - training time is reduced because parameters are adjusted in parallel

- Scalable for large networks

  - training technique adjusts parameters in parallel, unlike brute-force gradient methods, which operate in serial

  - scales with network size by operating in constant time with respect to the number of parameters

- Accurate - in-situ training on a physical device avoids model-based methods, which often do not perfectly represent the physical system

- Can be implemented with no additional hardware or components
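
The scaling contrast between brute-force serial gradient methods and parallel, backpropagation-style gradient evaluation can be illustrated with a small numerical sketch. It is not the inventors' implementation and does not model the optical measurement itself. It assumes a hypothetical two-waveguide circuit built from alternating phase shifters and fixed 50:50 beam splitters, and a squared-error loss; all function names are illustrative. The brute-force routine needs two forward passes per phase shifter, while the adjoint routine obtains every derivative from one forward pass and one backward pass.

```python
import cmath
import math

S = 1 / math.sqrt(2)

def splitter(v):
    """Fixed 50:50 beam splitter coupling two waveguide modes (assumed model)."""
    a, b = v
    return (S * (a + 1j * b), S * (1j * a + b))

def splitter_adjoint(v):
    """Conjugate transpose of the splitter, used to propagate the error backward."""
    a, b = v
    return (S * (a - 1j * b), S * (-1j * a + b))

def forward(thetas, x):
    """Return the output field and the field just after each phase shifter."""
    u, after_shift = x, []
    for th in thetas:
        v = (cmath.exp(1j * th) * u[0], u[1])  # phase shifter on mode 0 only
        after_shift.append(v)
        u = splitter(v)
    return u, after_shift

def loss(thetas, x, target):
    """Squared error between the output field and a target field."""
    y, _ = forward(thetas, x)
    return sum(abs(yj - tj) ** 2 for yj, tj in zip(y, target))

def finite_difference_gradient(thetas, x, target, eps=1e-6):
    """Brute force: perturb one phase at a time (2 forward passes per parameter)."""
    grad = []
    for k in range(len(thetas)):
        up = list(thetas)
        dn = list(thetas)
        up[k] += eps
        dn[k] -= eps
        grad.append((loss(up, x, target) - loss(dn, x, target)) / (2 * eps))
    return grad

def adjoint_gradient(thetas, x, target):
    """Backpropagation: one forward and one backward pass give all derivatives."""
    y, after_shift = forward(thetas, x)
    g = tuple(yj - tj for yj, tj in zip(y, target))  # error at the output
    grad = [0.0] * len(thetas)
    for k in reversed(range(len(thetas))):
        g = splitter_adjoint(g)                      # back through the splitter
        v0 = after_shift[k][0]
        grad[k] = 2 * (g[0].conjugate() * 1j * v0).real
        g = (cmath.exp(-1j * thetas[k]) * g[0], g[1])  # back through the shifter
    return grad

if __name__ == "__main__":
    thetas = [0.3, -1.1, 2.0, 0.7]
    x, target = (1 + 0j, 0j), (0j, 1 + 0j)
    print("finite difference:", finite_difference_gradient(thetas, x, target))
    print("adjoint          :", adjoint_gradient(thetas, x, target))
```

Both routines should agree to within the finite-difference error. The difference is cost: the brute-force loop grows linearly with the number of phase shifters, whereas the adjoint version's number of passes through the circuit stays fixed.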

Publications

- T. W. Hughes, M. Minkov, Y. Shi, S. Fan, “Training of photonic neural networks through in situ backpropagation and gradient measurement,” Optica, volume 5, issue 7, pages 864-871 (2018)

Related Links

Patents

- Published Application: 20210192342

- Issued: 12,026,615 (USA)

Similar Technologies

- Systems and Methods for Activation Functions for Photonic Neural Networks (S18-093B)

- Phase Shifting by Mechanical Movement in Integrated Photonics Circuits (S15-472)

- Fully-automated design of grating couplers (software) (S18-019)